📚 Audiblez v4: Generate audiobooks from e-books

Posted on 9 Feb 2025 by Claudio Santini

v4 is out, with CUDA support, a new GUI, and many more supported languages 🇺🇸 🇬🇧 🇪🇸 🇫🇷 🇮🇳 🇮🇹 🇯🇵 🇧🇷 🇨🇳!

Thanks to the many people who spontaneously contributed Pull Requests, Audiblez, my little tool to convert e-books into audiobooks, has improved enormously. Turns out that people really want to use this thing! Finally, it works on CUDA too. I get ~500 chars/sec on a Google Colab T4, which is about 6 minutes to convert "Moby Dick" to an audiobook.

In v4.0 you'll find:

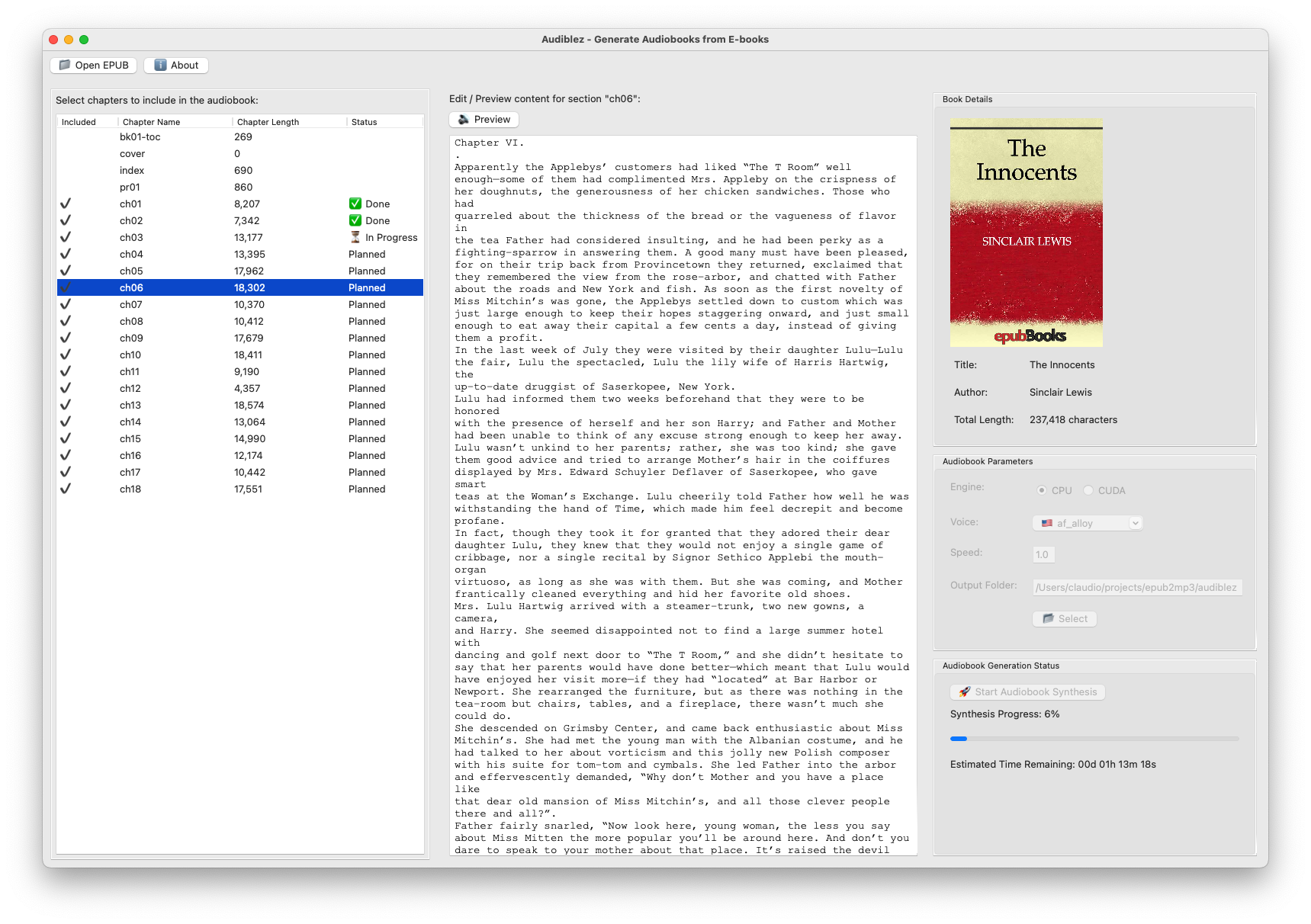

- Multi-platform GUI based on wxWidgets

- Languages other than English finally work well. Voice quality varies and the English voices are generally the best, but the others are still better than most TTS around.

- Moved away from ONNX to raw Torch via the kokoro Python package

- CUDA acceleration is now supported, but Apple Silicon defaults to CPU (no MLX Kokoro implementations at the moment)

- Cover image carried over to final audiobook

- Chapter timestamps in the audiobook

- Better support for Windows

Voice Samples

These are some samples of the voices available in Audiblez:

| Voice | Code | Audio |
|---|---|---|
| American English female | af_heart | |
| American English female | af_bella | |
| American English male | am_michael | |
| British English female | bf_emma | |
| British English male | bm_george | |
| Spanish female | ef_dora | |
| Spanish male | em_alex | |
| French female | ff_siwis | |
| Hindi female | hf_alpha | |
| Hindi male | hm_omega | |
| Italian female | if_sara | |
| Italian male | im_nicola | |
| Japanese female | jf_alpha | |

About Audiblez

Audiblez generates .m4b audiobooks from regular .epub e-books, using Kokoro's high-quality speech synthesis.

Kokoro-82M is a recently published text-to-speech model with just 82M params and very natural-sounding output. It's released under the Apache licence and was trained on fewer than 100 hours of audio. It currently supports these languages: 🇺🇸 🇬🇧 🇪🇸 🇫🇷 🇮🇳 🇮🇹 🇯🇵 🇧🇷 🇨🇳

On a Google Colab T4 GPU via CUDA, it takes about 5 minutes to convert Orwell's "Animal Farm" (about 160,000 characters) to an audiobook, at a rate of about 600 characters per second.

On my M2 MacBook Pro, on CPU, it takes about 1 hour, at a rate of about 60 characters per second.
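As a rough sanity check on those figures, conversion time is simply the character count divided by the throughput. The sketch below uses a hypothetical helper, estimate_minutes, with the numbers quoted above; real speeds will vary with hardware, voice, and text.

```python
def estimate_minutes(num_chars: int, chars_per_second: float) -> float:
    """Estimate conversion time in minutes from text length and throughput."""
    if chars_per_second <= 0:
        raise ValueError("chars_per_second must be positive")
    return num_chars / chars_per_second / 60


# Figures quoted in this post: ~160,000 characters for the book,
# ~600 chars/sec on a Colab T4 GPU and ~60 chars/sec on an M2 CPU.
gpu_minutes = estimate_minutes(160_000, 600)
cpu_minutes = estimate_minutes(160_000, 60)
print(f"T4 GPU: about {gpu_minutes:.0f} minutes")
print(f"M2 CPU: about {cpu_minutes:.0f} minutes")
```

With these inputs the estimate comes out at roughly 4 to 5 minutes on the GPU and about 45 minutes on the CPU, in the same ballpark as the timings above.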

How to install the Command Line tool

If you have Python 3 on your computer, you can install Audiblez with pip.

You also need espeak-ng and ffmpeg installed on your machine:

sudo apt install ffmpeg espeak-ng # on Ubuntu/Debian 🐧

pip install audiblez

brew install ffmpeg espeak-ng # on Mac 🍏

pip install audiblez

Then you can convert an .epub directly with:

audiblez book.epub -v af_sky

It will first create a series of book_chapter_1.wav, book_chapter_2.wav, etc. files in the same directory, and at the end it will produce a book.m4b file with the whole book, which you can listen to with VLC or any audiobook player.

It will only produce the .m4b file if you have ffmpeg installed on your machine.
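Since both external tools matter here, it can be handy to check for them before starting a long conversion. This is a minimal sketch using only the Python standard library; missing_tools is a hypothetical helper and is not part of Audiblez.

```python
import shutil


def missing_tools(tools=("ffmpeg", "espeak-ng")):
    """Return the names of required command-line tools not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


missing = missing_tools()
if missing:
    print("Missing tools:", ", ".join(missing))
else:
    print("ffmpeg and espeak-ng were found on PATH")
```

If ffmpeg shows up as missing, expect only the per-chapter .wav files and no final .m4b.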

How to run the GUI

The GUI is a simple graphical interface to use audiblez. You need some extra dependencies to run the GUI:

sudo apt install ffmpeg espeak-ng

sudo apt install libgtk-3-dev # just for Ubuntu/Debian 🐧, Windows/Mac don't need this

pip install audiblez pillow wxpython

Then you can run the GUI with:

audiblez-ui

Speed

By default the audio is generated at normal speed, but you can make it up to twice as slow or twice as fast by specifying a speed argument between 0.5 and 2.0:

audiblez book.epub -v af_sky -s 1.5
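If you drive the command-line tool from a script, you may want to validate the speed before launching it. The sketch below only assembles the argument list from the flags shown in this post (-v, -s and --cuda); build_command and its default values are assumptions made for illustration, not an Audiblez API.

```python
def build_command(epub_path, voice="af_sky", speed=1.0, cuda=False):
    """Build the audiblez argument list using the flags shown in this post."""
    if not 0.5 <= speed <= 2.0:
        raise ValueError("speed must be between 0.5 and 2.0")
    command = ["audiblez", epub_path, "-v", voice, "-s", str(speed)]
    if cuda:
        command.append("--cuda")
    return command


command = build_command("book.epub", voice="af_sky", speed=1.5)
print(" ".join(command))
# To actually run the conversion (requires audiblez to be installed):
# import subprocess; subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.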

Supported Voices

Use the -v option to specify the voice. The available voices are listed in the table below.

The first letter is the language code and the second is the gender of the speaker, e.g. im_nicola is an Italian male voice.

| Language | Voices |
|---|---|
| 🇺🇸 | af_alloy, af_aoede, af_bella, af_heart, af_jessica, af_kore, af_nicole, af_nova, af_river, af_sarah, af_sky, am_adam, am_echo, am_eric, am_fenrir, am_liam, am_michael, am_onyx, am_puck, am_santa |
| 🇬🇧 | bf_alice, bf_emma, bf_isabella, bf_lily, bm_daniel, bm_fable, bm_george, bm_lewis |
| 🇪🇸 | ef_dora, em_alex, em_santa |
| 🇫🇷 | ff_siwis |
| 🇮🇳 | hf_alpha, hf_beta, hm_omega, hm_psi |
| 🇮🇹 | if_sara, im_nicola |
| 🇯🇵 | jf_alpha, jf_gongitsune, jf_nezumi, jf_tebukuro, jm_kumo |
| 🇧🇷 | pf_dora, pm_alex, pm_santa |
| 🇨🇳 | zf_xiaobei, zf_xiaoni, zf_xiaoxiao, zf_xiaoyi, zm_yunjian, zm_yunxi, zm_yunxia, zm_yunyang |
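The naming convention can be decoded mechanically. The following sketch is illustrative only: describe_voice is a hypothetical helper, the language letters are inferred from the tables in this post, and "p" and "z" are assumed to stand for Brazilian Portuguese and Mandarin Chinese based on the flags.

```python
# Language letters inferred from the voice tables in this post;
# "p" and "z" are assumed to mean Brazilian Portuguese and Mandarin Chinese.
LANGUAGES = {
    "a": "American English",
    "b": "British English",
    "e": "Spanish",
    "f": "French",
    "h": "Hindi",
    "i": "Italian",
    "j": "Japanese",
    "p": "Brazilian Portuguese",
    "z": "Mandarin Chinese",
}
GENDERS = {"f": "female", "m": "male"}


def describe_voice(code: str) -> str:
    """Turn a voice code such as 'im_nicola' into a readable description."""
    prefix, _, name = code.partition("_")
    if len(prefix) != 2 or not name:
        raise ValueError(f"unexpected voice code: {code!r}")
    language = LANGUAGES.get(prefix[0])
    gender = GENDERS.get(prefix[1])
    if language is None or gender is None:
        raise ValueError(f"unknown language or gender in: {code!r}")
    return f"{language} {gender} voice ({name})"


print(describe_voice("im_nicola"))
```

A helper like this could be used to reject a mistyped voice prefix before a long conversion starts, although it does not check that the voice name itself exists.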

How to run on GPU

By default, audiblez runs on CPU. If you pass the --cuda option, it will try to use the CUDA device via Torch.

Check out this example: Audiblez running on a Google Colab Notebook with CUDA.

We don't currently support GPU acceleration on Apple Silicon, as there is not yet a Kokoro implementation in MLX. As soon as one is available, we will support it.
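The "try CUDA, otherwise stay on CPU" behaviour can be sketched with Torch's own availability check. This is a minimal illustration and not Audiblez's actual code: pick_device is a hypothetical helper, and it also falls back to CPU when Torch is not installed.

```python
def pick_device(prefer_cuda: bool) -> str:
    """Return "cuda" only if requested and usable, otherwise "cpu"."""
    try:
        import torch  # imported lazily so the sketch runs without Torch
    except ImportError:
        return "cpu"
    if prefer_cuda and torch.cuda.is_available():
        return "cuda"
    return "cpu"


print("Selected device:", pick_device(prefer_cuda=True))
```

On an Apple Silicon machine torch.cuda.is_available() returns False, so a check like this would select the CPU.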